Now that we have all the hardware (server, USB3 disk cabinet and disks), we need to set it all up! This post will guide you through installing Ubuntu, setting up the storage and configuring the Minio S3 backend, including how to deal with multiple tenants.

This post is part of a multi-part article.

You can find the main index here

Connecting the hardware

Physically installing the server is quite easy. Take the server and the disk cabinet out of their boxes and connect their power adapters. Then connect the two with the supplied USB3 cable and insert the disks into the disk cabinet.

I strongly advise connecting the server to your network with a cable. WiFi works, but for a machine that's going to stay in one place, a wired connection is always better.

Once that is all done, turn it on and boot into the supplied Windows 10 to see if everything is working.

Create an Ubuntu bootable USB stick

If everything works, use the server (or a different PC) to download the Ubuntu 16.04 LTS Server netinstall ISO image and a tool called Rufus. Use Rufus to flash the netinstall ISO to a USB stick (64MB minimum).

*I don't have the hardware to test with yet. It might be that the Apollo Lake CPU is too new for Ubuntu 16.04 and requires the newer 17.04 release. Once I'm able to test, I'll update this section!

Boot from USB stick to install Ubuntu

*Since I don't have the hardware yet and it's hard to take a screenshot of a physical screen, all following steps are performed in a virtual environment. I'll update this post with any changes that turn out to be necessary!

Video Linux Install

Since writing this article, I have also made a video of installing Linux on the Mele PCG35 Apo! Check it out here:

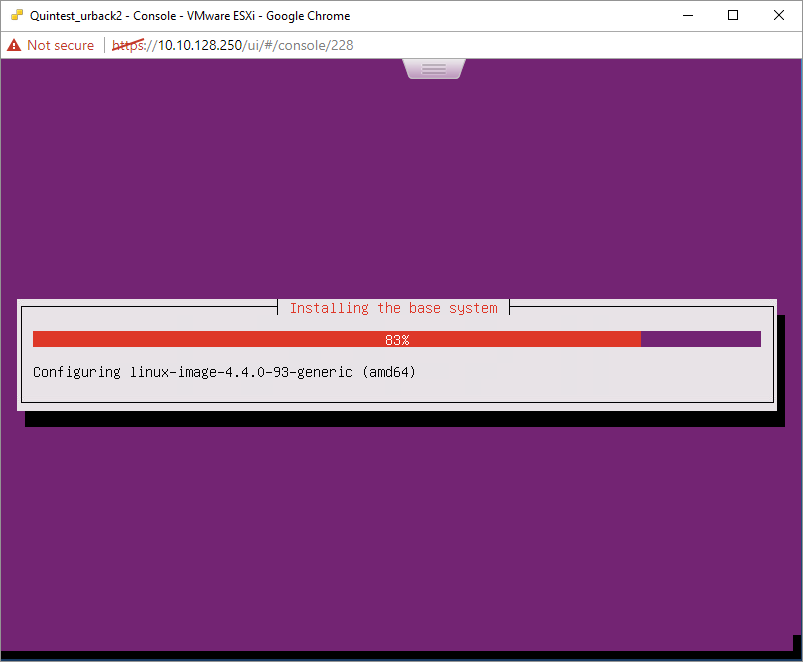

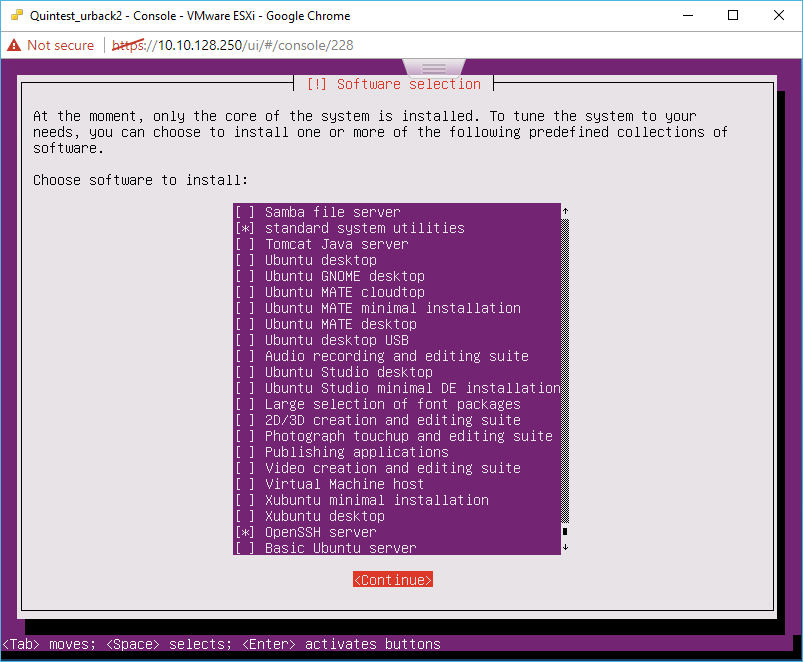

Installing Ubuntu to internal 32GB eMMC

Insert/Mount the USB stick/ISO and boot the server from it.

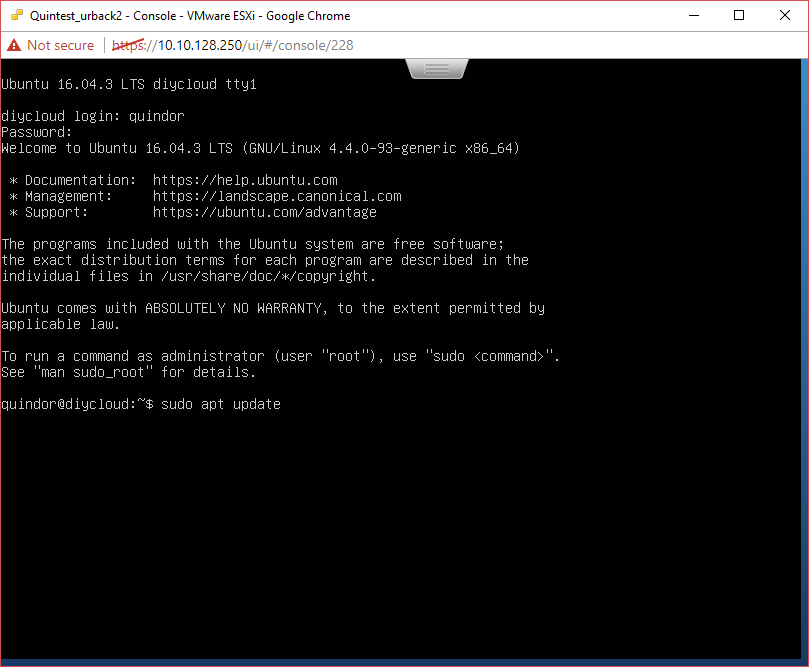

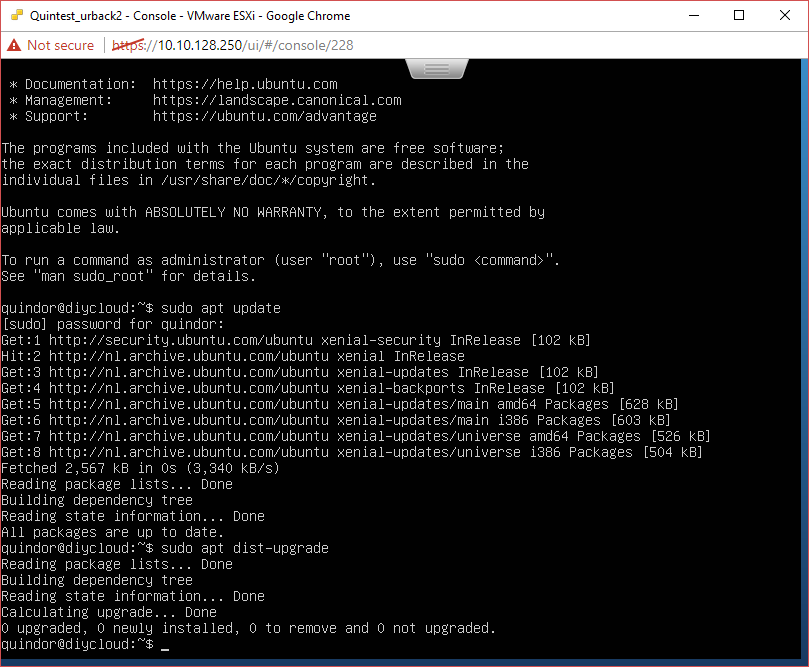

After first boot, install basic packages

Once booted, we need to make sure all software is up to date. Run the following commands:

sudo apt update
sudo apt-get dist-upgrade

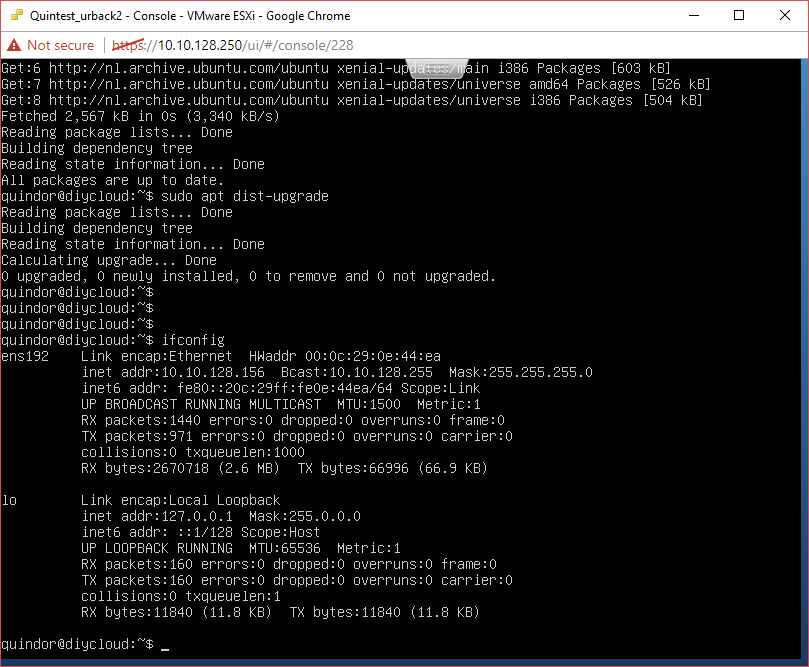

Finding the current DHCP address

Since this is a 24-hour server that's going to need some firewall port forwards to work remotely, it needs a static IP address instead of a DHCP address, so the address doesn't change over time. First, look up the address DHCP currently assigned and the name of the network interface:

ifconfig

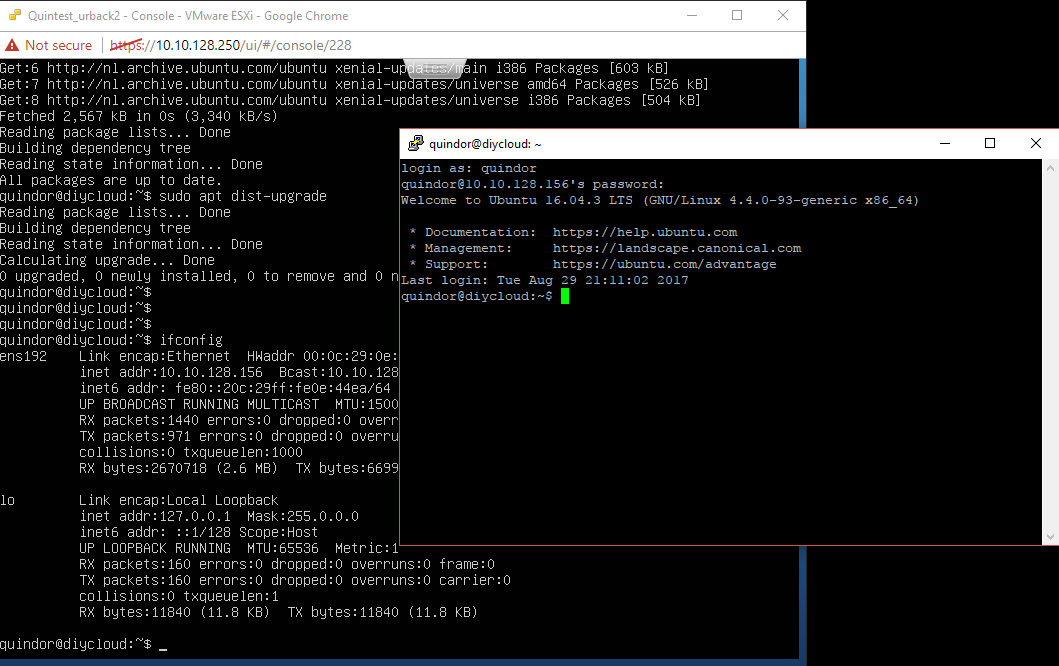

Connect to the machine on that address using an SSH client like PuTTY.

Installing needed packages

We need some packages that we're going to use on the server. These are just the bare basics; in another post I'll discuss adding more advanced functions such as monitoring and other extras.

To install the packages, run the following command:

sudo apt install dstat htop nano zfsutils-linux

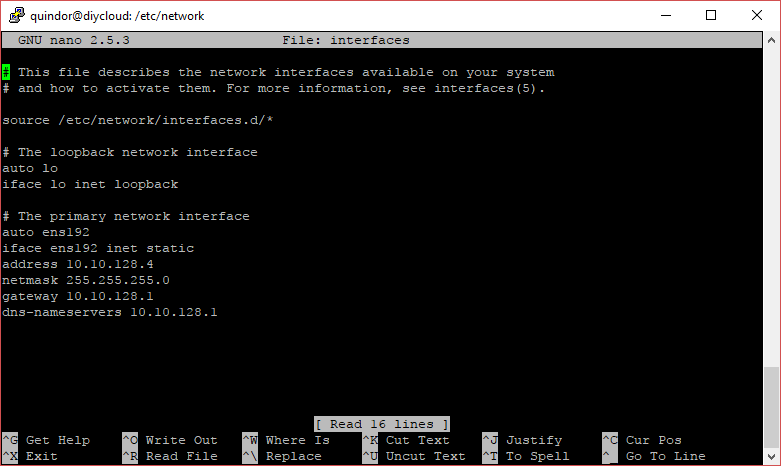

Setting a new dedicated IP

To set a new dedicated IP, do the following:

cd /etc/network
sudo nano interfaces

Edit the interfaces file to contain the text listed below. Caution: ens192 is what the network interface in my VM is called. In your case it will likely have a different name, so make sure to use that! Replace the existing DHCP configuration for your interface (the "iface ... inet dhcp" line) with the following:

auto ens192
iface ens192 inet static
address ip.ip.ip.ip
netmask 255.255.255.0
gateway ip.of.firewall.here
dns-nameservers ip.of.firewall.here
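If you'd rather generate the stanza than type it by hand, the small helper below prints it in the same ifupdown format. It is a hypothetical sketch, not part of the original setup: the interface name, address and gateway are placeholders you must replace, and it assumes a /24 network (255.255.255.0) with the firewall also acting as DNS server, exactly like the example.

```shell
#!/bin/sh
# Hypothetical helper: print an /etc/network/interfaces stanza for a static
# IPv4 address (ifupdown format, as used on Ubuntu 16.04).
# Assumes a /24 network and that the gateway (firewall) also serves DNS.
render_static_stanza() (
    iface=$1     # e.g. ens192 (check yours with ifconfig)
    address=$2   # the static IP you picked for the server
    gateway=$3   # your firewall/router
    printf 'auto %s\n' "$iface"
    printf 'iface %s inet static\n' "$iface"
    printf '    address %s\n' "$address"
    printf '    netmask 255.255.255.0\n'
    printf '    gateway %s\n' "$gateway"
    printf '    dns-nameservers %s\n' "$gateway"
)
```

For example, `render_static_stanza ens192 192.168.1.50 192.168.1.1` (placeholder addresses) prints a six-line stanza you can review before pasting it into the file with nano.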

Again, make sure to use the network card name and settings appropriate for your network! When done correctly it should look something like this:

After this, reboot the system using the command below and reconnect with SSH to the newly set IP.

sudo reboot

Configuring the storage

As I mentioned in the previous article, I'm going to be using three 10TB disks in a RAIDz1, because I don't expect much random I/O and I want to make the most of the available disk space.

Since the server is for backups only, even if two disks failed at the same time and all backup data were lost, it's highly unlikely that the original data would be lost at exactly the same moment. In my opinion, that's a calculated risk worth taking to save some cost.
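To put a number on "making the most of the available disk space": RAIDz1 spends roughly one disk's worth of capacity on parity, so three 10TB disks leave about 20TB usable. The sketch below does that arithmetic and converts the result to TiB, which is closer to what ZFS tools report. It is a rough estimate under stated assumptions: ZFS metadata, padding and reserved space are ignored, so the real figure will be somewhat lower.

```shell
#!/bin/sh
# Rough usable-capacity estimate for a single RAIDz1 vdev.
# Assumption: usable space = (disks - 1) * disk size; ZFS metadata,
# padding and reserved pool space are ignored.
raidz1_usable() (
    disks=$1     # number of disks in the vdev, e.g. 3
    size_tb=$2   # size of each disk in decimal TB, e.g. 10
    if [ "$disks" -lt 2 ]; then
        echo "RAIDz1 needs at least 2 disks" >&2
        exit 1
    fi
    usable_tb=$(( (disks - 1) * size_tb ))
    # Decimal TB (10^12 bytes) to TiB (2^40 bytes), two decimals, truncated.
    bytes=$(( usable_tb * 1000000000000 ))
    tib_x100=$(( bytes * 100 / 1099511627776 ))
    printf '%d TB usable (~%d.%02d TiB)\n' "$usable_tb" \
        $(( tib_x100 / 100 )) $(( tib_x100 % 100 ))
)
```

Running `raidz1_usable 3 10` prints `20 TB usable (~18.18 TiB)`, which is why a "30TB" set of disks shows up as roughly 18TiB of pool space.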

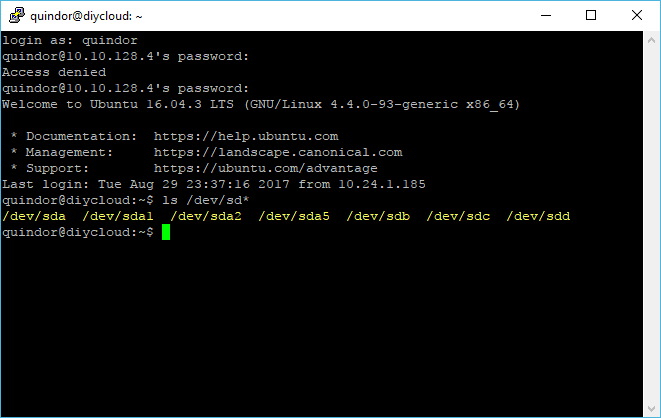

First, let’s check what available storage devices we have:

We're going to create a RAIDz1 out of /dev/sdb, /dev/sdc and /dev/sdd. Run the commands below to create the RAIDz1 pool and check its status:

sudo zpool create -f -o ashift=12 backupstorage raidz1 /dev/sdb /dev/sdc /dev/sdd
sudo zpool status -v backupstorage

After that it should look something like this:

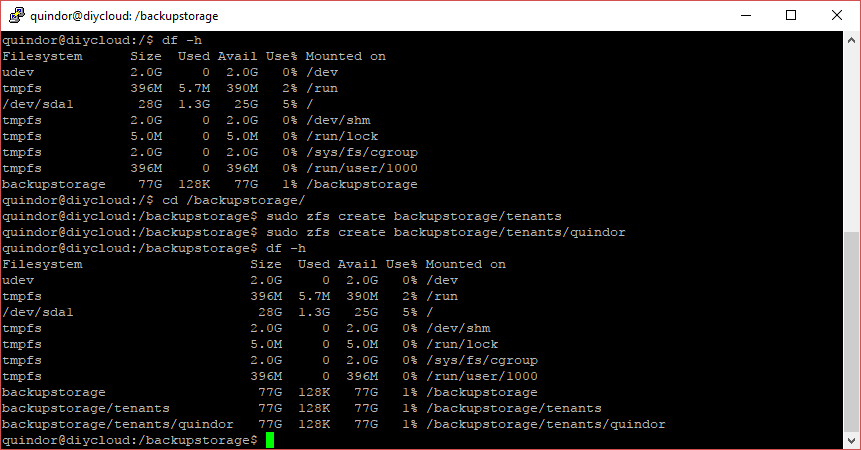

Creating ZFS datasets

To be able to manage different "directories" (datasets) and keep space divided between tenants, we're going to create two datasets:

sudo zfs create backupstorage/tenants
sudo zfs create backupstorage/tenants/quindor

Setting a quota on a dataset

To restrict tenants from using too much space, we're going to use ZFS quotas on the individual datasets. To set a quota on the quindor dataset we just created, run the following command:

sudo zfs set quota=50G backupstorage/tenants/quindor

With this command, the tenant Quindor will be restricted to using 50GB of data. Since a quota is just a property on a dataset within the larger pool, it can easily be adjusted later on if needed.
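ZFS treats size suffixes such as `G` as powers of 1024, so `quota=50G` means 50 GiB (53,687,091,200 bytes), slightly more than 50 decimal GB. The hypothetical helper below converts such a value to bytes, which can be handy when comparing quotas with what a tenant sees in the Minio web interface. It is a sketch that only handles whole numbers with an optional K, M, G or T suffix.

```shell
#!/bin/sh
# Hypothetical helper: convert a ZFS-style size ("50G", "2T", "1024")
# to bytes, using binary multiples (K = 1024, M = 1024^2, ...).
# Only whole numbers with an optional K/M/G/T suffix are supported.
zfs_size_to_bytes() (
    value=$1
    case $value in
        *[KkMmGgTt]) num=${value%?}; unit=${value#"$num"} ;;
        *)           num=$value;     unit= ;;
    esac
    case $num in
        ''|*[!0-9]*) echo "unsupported size: $value" >&2; exit 1 ;;
    esac
    case $unit in
        '')   mult=1 ;;
        [Kk]) mult=1024 ;;
        [Mm]) mult=$((1024 * 1024)) ;;
        [Gg]) mult=$((1024 * 1024 * 1024)) ;;
        [Tt]) mult=$((1024 * 1024 * 1024 * 1024)) ;;
    esac
    echo $(( num * mult ))
)
```

`zfs_size_to_bytes 50G` prints `53687091200`.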

The Minio S3 storage backend

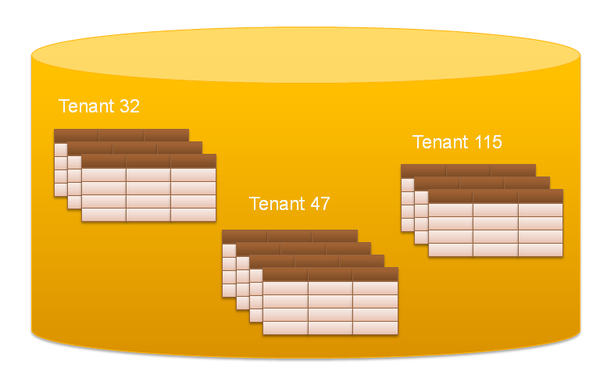

We’ll be using a Minio S3 object storage server per tenant.

Separating and securing Tenants

For each tenant we'll be running a separate instance of the Minio S3 backend storage server. Each instance will have its own TCP port, private Access Key and Secret Key, separating environments between clients.

Security through encryption, a trust-no-one scenario

Each tenant will be able to create buckets in their instance, so they can use multiple clients within their tenant account. That does mean that, in theory, a client within the tenant could access the data of another client, and that the server administrator could look inside all the backups.

To solve that problem, the Duplicati client software is able to perform client-side encryption. By using a different encryption key per client, no one but the possessor of that encryption key will be able to open and view the data, including other clients in the same tenant account or even the server administrator.

The data structure of Duplicati even prevents the other clients or server administrator from viewing file names or metadata since everything will be stored in deduplicated and compressed block archives on the server.

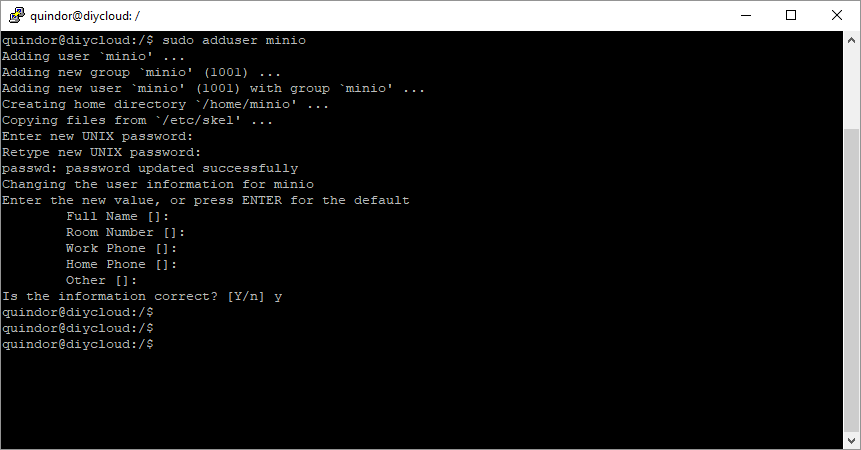

Installing Minio for the Quindor tenant

First we need to do some configuration before downloading Minio. Create a dedicated minio user by executing the following command:

sudo adduser minio

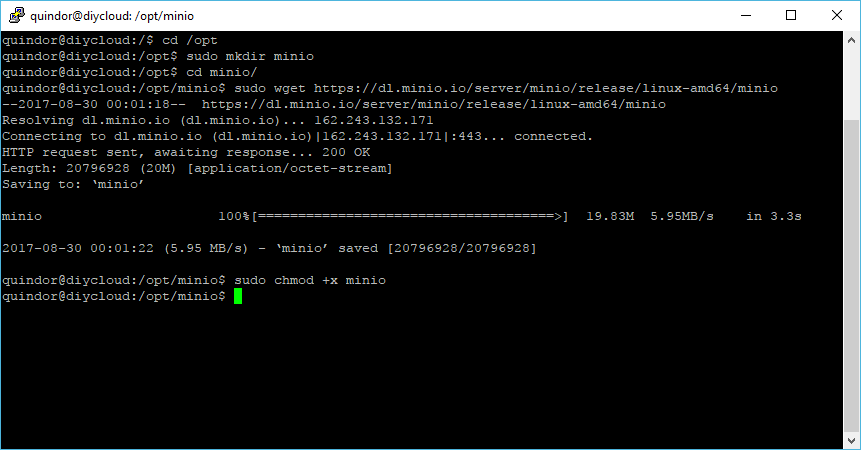

Next we need to create some directories and set permissions:

sudo mkdir /opt/minio
sudo mkdir /opt/minio/tenant-configs
sudo mkdir /opt/minio/tenant-configs/quindor
sudo chown -R minio:minio /opt/minio/
sudo chown -R minio:minio /backupstorage/tenants/

Once that is done, we’re going to download the Minio software:

sudo su minio
cd /opt/minio
wget https://dl.minio.io/server/minio/release/linux-amd64/minio
chmod +x minio

Starting a test instance of Minio

Next we’re going to start a test instance of Minio for the Quindor tenant.

While logged in as the minio user inside of the /opt/minio directory, execute the following command:

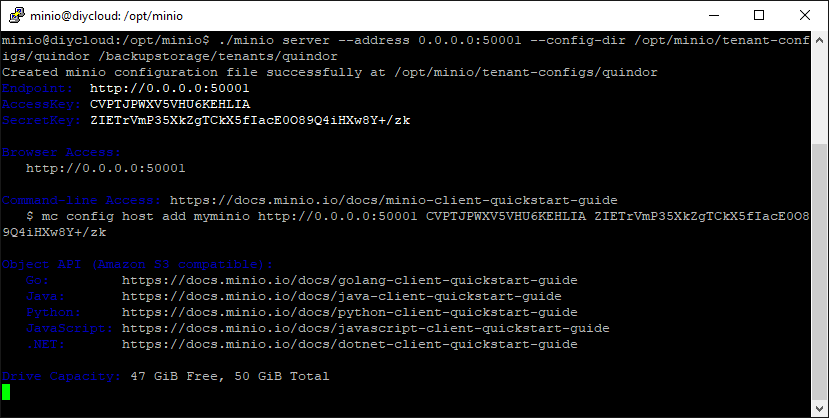

./minio server --address 0.0.0.0:50001 --config-dir /opt/minio/tenant-configs/quindor /backupstorage/tenants/quindor

If all goes well after starting, the following will be displayed on the screen:

Starting the Minio server for a specific tenant for the first time generates their Access Key and Secret Key. Note them down, because you'll need them to test the server and to pass on to the tenant.

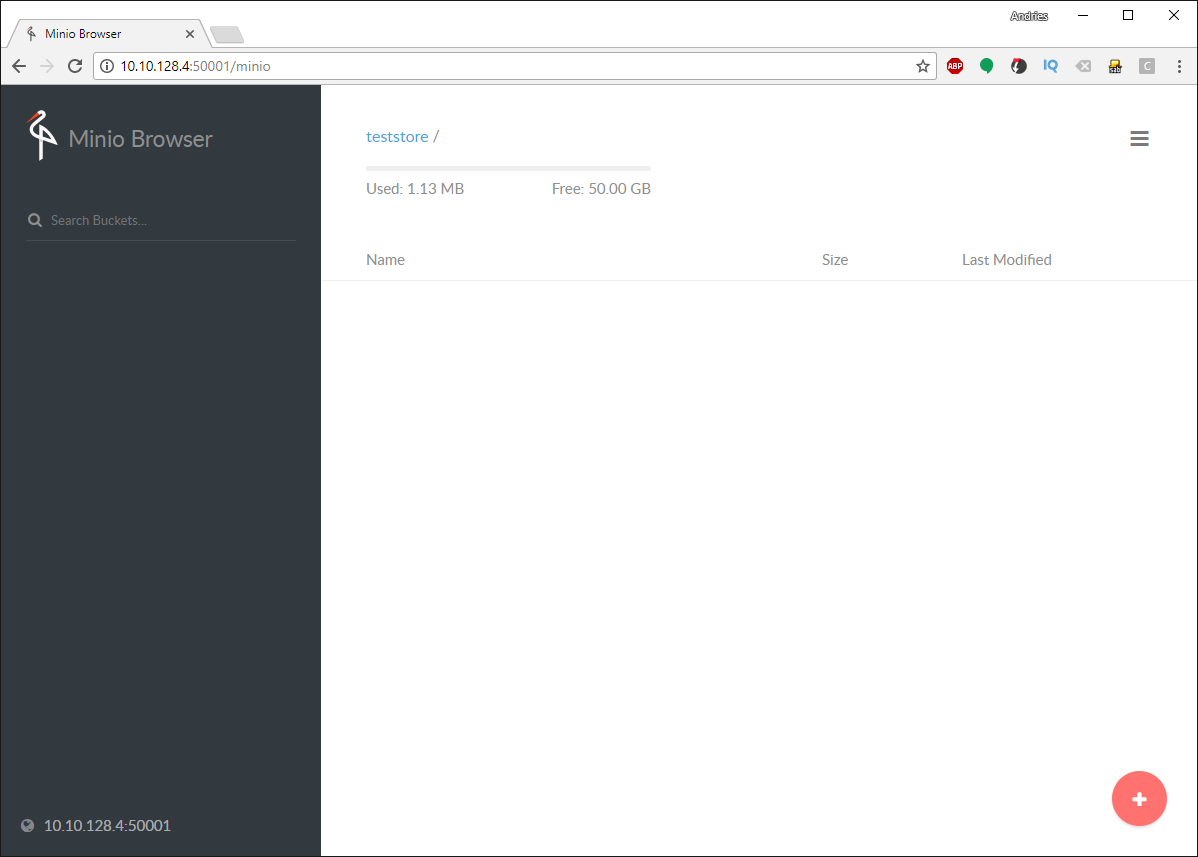

Open a web browser and go to the static IP address you gave the machine, on the port you started Minio on (50001 in this case). Once there, fill in the keys to log in.

Once logged in we’re greeted with the following screen:

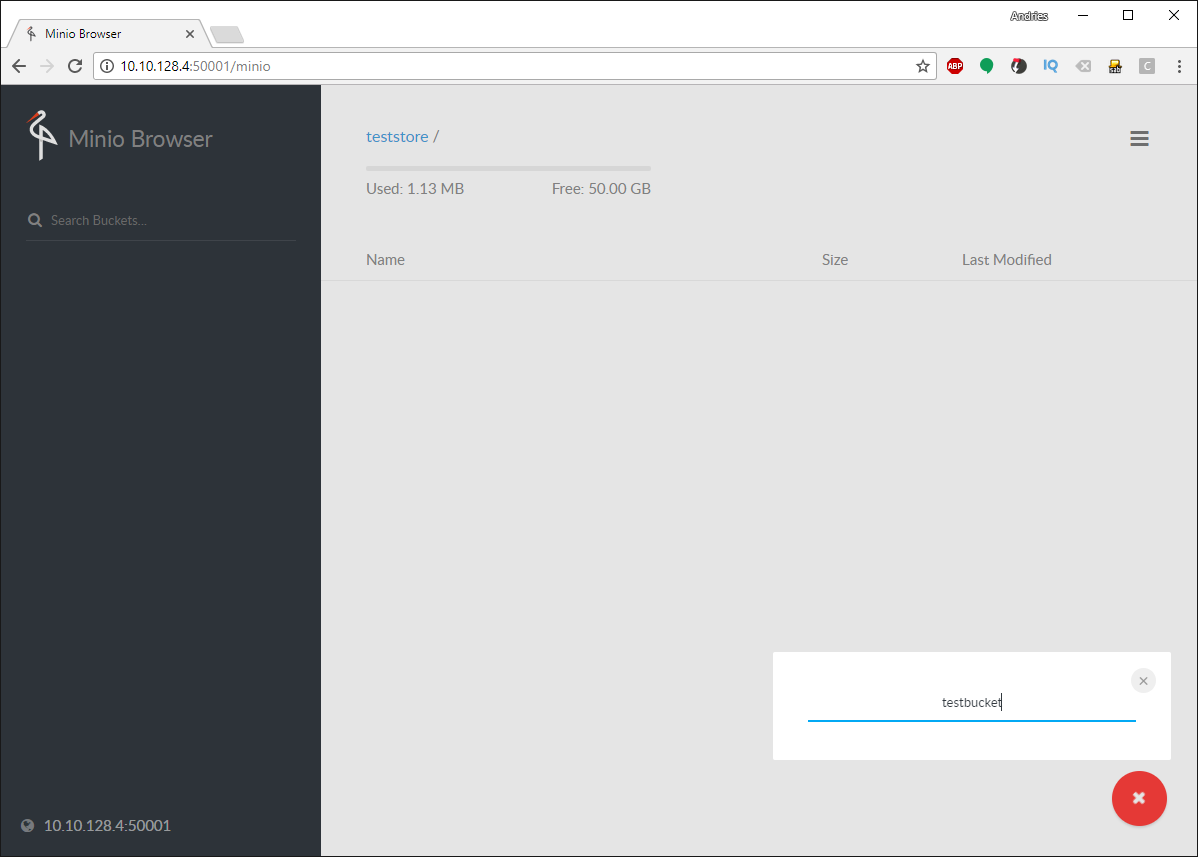

Let’s test creating a bucket and uploading a file. Click the red plus button in the bottom right and click the first yellow icon from the bottom, create bucket.

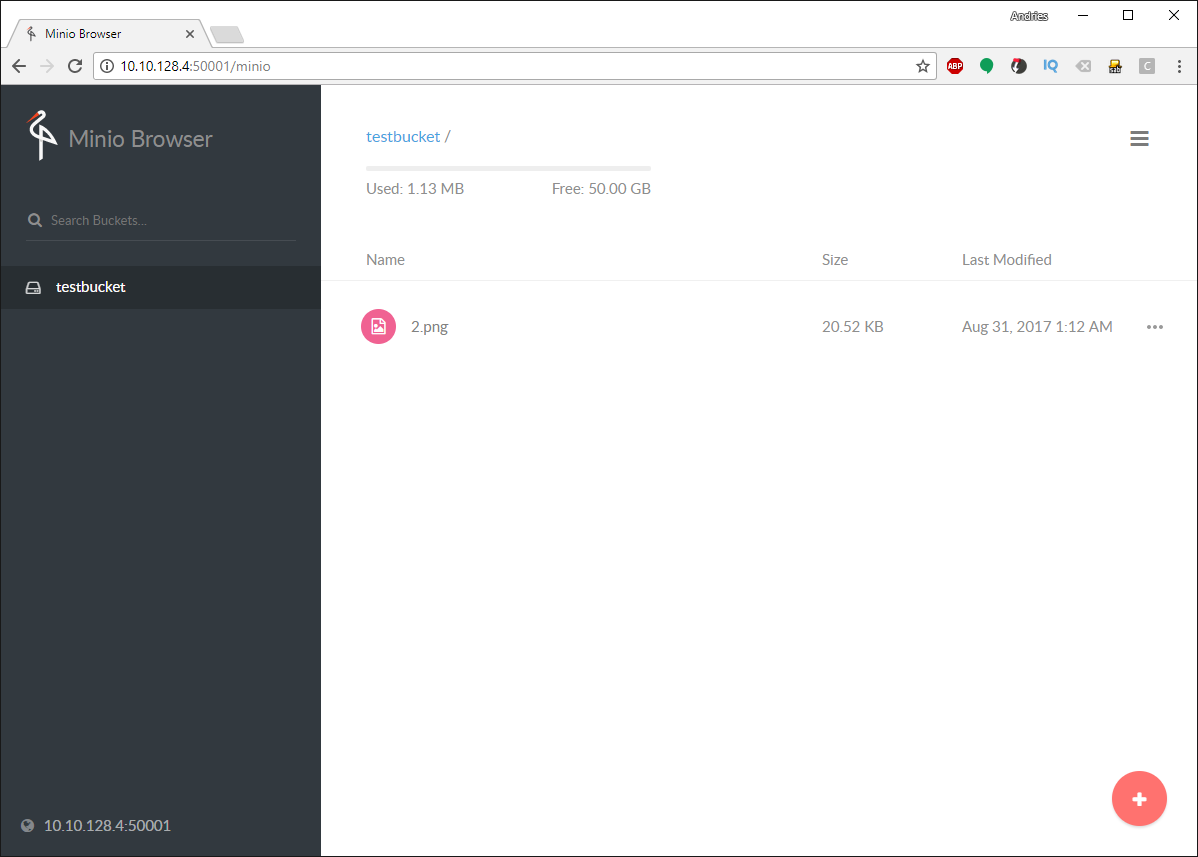

Once done, click the red icon again and select the second yellow icon to upload a file. Once the upload finishes, you should see the file in the bucket. Note that the tenant only sees the amount of space allowed by their quota, even though the ZFS pool has more free space.

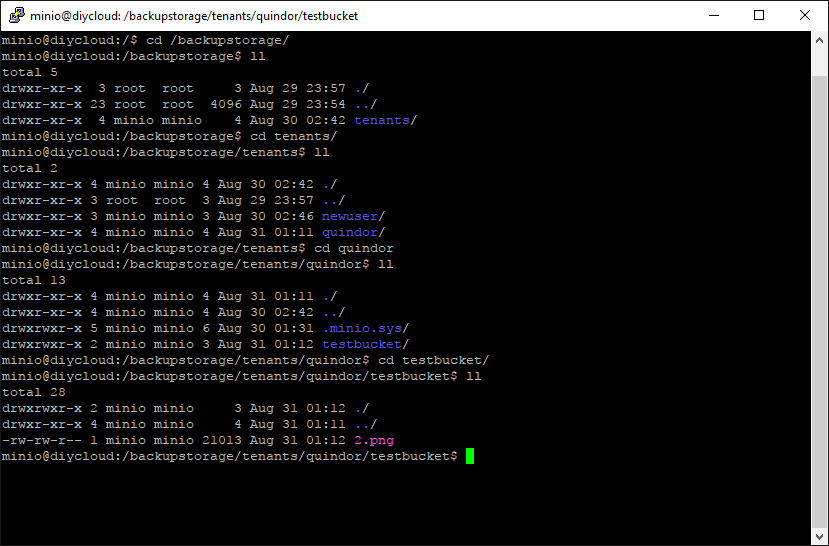

Let's take a look at how that looks on the file system.

As you can see, the Minio server is just an S3 front-end for the ZFS dataset we created, and the file is stored on the file system like any regular file. It gives each tenant a nice front-end to create their own buckets and see how much space is left.

It also keeps the setup very portable and flexible. For instance, we're going to be using Duplicati 2 to write backups, but if a client wants to use another backup tool that supports writing to an S3 bucket (most do now), they can use whatever tool they prefer.

Autostarting the Minio S3 server(s)

Running the Minio server in a terminal window is a good way to test it, but it won't work if you want to run the server long term. Let's create systemd service files to auto-start the Minio server at boot.

Make sure you are logged in as the user you created during the install and not the minio user from before (type exit to leave the minio user's shell); the minio user does not have the rights to become an admin.

First we need to create the service file:

cd /etc/systemd/system
sudo nano minio-quindor.service

Once you have that file open, enter the following details (with your own info in the right spots!)

# Start-up script for Minio storage backend for DIY cloud backup (http://intermit.tech)
[Unit]
Description=Minio for tenant Quindor
[Service]
User=minio
ExecStart=/opt/minio/minio server --address 0.0.0.0:50001 --config-dir /opt/minio/tenant-configs/quindor /backupstorage/tenants/quindor
[Install]
WantedBy=multi-user.target
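Because every tenant's unit file differs only in the tenant name and port, it can also be generated. The hypothetical helper below prints a unit file with the same layout as the one above (binary in /opt/minio, config in /opt/minio/tenant-configs/<tenant>, data in /backupstorage/tenants/<tenant>). It is a sketch: the `--config-dir` flag matches the Minio release used in this post and may not be accepted by newer Minio releases.

```shell
#!/bin/sh
# Hypothetical helper: print a systemd unit for one Minio tenant,
# following the directory layout used in this post.
render_minio_unit() (
    tenant=$1   # e.g. quindor
    port=$2     # unique TCP port per tenant, e.g. 50001
    cat <<EOF
# Start-up script for Minio storage backend for DIY cloud backup (http://intermit.tech)
[Unit]
Description=Minio for tenant $tenant

[Service]
User=minio
ExecStart=/opt/minio/minio server --address 0.0.0.0:$port --config-dir /opt/minio/tenant-configs/$tenant /backupstorage/tenants/$tenant

[Install]
WantedBy=multi-user.target
EOF
)
```

For example, `render_minio_unit seconduser 50002 | sudo tee /etc/systemd/system/minio-seconduser.service` writes the unit file for a second tenant; check it with `cat` before enabling it.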

After that's done, run the following commands to load, enable and start the service:

sudo systemctl daemon-reload
sudo systemctl enable minio-quindor.service
sudo systemctl start minio-quindor.service

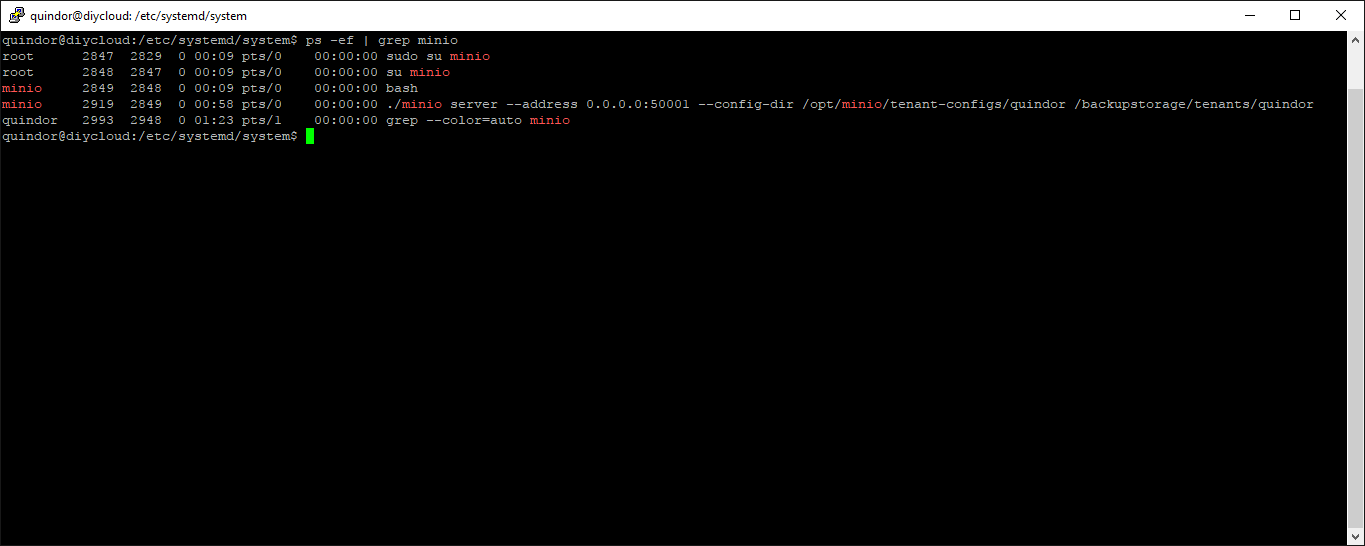

If everything went correctly, the Minio server for the tenant Quindor should be running in the background:

And there it is: PID 2919 is the server we just started using the service. It will now start automatically at boot and stop at shutdown.

Adding a new tenant

Most of you will have figured it out by now but let’s quickly run through all the steps needed to add another tenant.

Create storage

sudo zfs create backupstorage/tenants/seconduser
sudo zfs set quota=50G backupstorage/tenants/seconduser

Create needed directories and set permissions

sudo mkdir /opt/minio/tenant-configs/seconduser
sudo chown -R minio:minio /opt/minio/tenant-configs/seconduser/
sudo chown -R minio:minio /backupstorage/tenants/seconduser/

Create and start service

sudo cp /etc/systemd/system/minio-quindor.service /etc/systemd/system/minio-seconduser.service
sudo nano /etc/systemd/system/minio-seconduser.service
*Replace all quindor references with seconduser*
*Replace port 50001 with a new unique port (50002 for instance)*

# Start-up script for Minio storage backend for DIY cloud backup (http://intermit.tech)
[Unit]
Description=Minio for tenant seconduser
[Service]
User=minio
ExecStart=/opt/minio/minio server --address 0.0.0.0:50002 --config-dir /opt/minio/tenant-configs/seconduser /backupstorage/tenants/seconduser
[Install]
WantedBy=multi-user.target

sudo systemctl daemon-reload
sudo systemctl enable minio-seconduser.service
sudo systemctl start minio-seconduser.service
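Since adding a tenant is always the same sequence, it can help to print the whole plan first and review it before running anything. The sketch below is a hypothetical dry-run helper: it executes nothing, it only echoes the commands from this section for a given tenant name, port and quota. The restriction to lowercase letters, digits, `-` and `_` in tenant names is my own safety assumption, not a ZFS or Minio rule.

```shell
#!/bin/sh
# Hypothetical dry run: print the "Adding a new tenant" steps for review.
# Nothing is executed; the unit file still has to be created by hand
# (copy and edit minio-quindor.service as described above).
print_add_tenant_steps() (
    tenant=$1   # e.g. seconduser
    port=$2     # e.g. 50002, must not be used by another tenant
    quota=$3    # e.g. 50G
    case $tenant in
        ''|*[!a-z0-9_-]*)
            echo "tenant name should use lowercase letters, digits, - or _" >&2
            exit 1 ;;
    esac
    echo "sudo zfs create backupstorage/tenants/$tenant"
    echo "sudo zfs set quota=$quota backupstorage/tenants/$tenant"
    echo "sudo mkdir /opt/minio/tenant-configs/$tenant"
    echo "sudo chown -R minio:minio /opt/minio/tenant-configs/$tenant/"
    echo "sudo chown -R minio:minio /backupstorage/tenants/$tenant/"
    echo "# create /etc/systemd/system/minio-$tenant.service using port $port"
    echo "sudo systemctl daemon-reload"
    echo "sudo systemctl enable minio-$tenant.service"
    echo "sudo systemctl start minio-$tenant.service"
)
```

Running `print_add_tenant_steps seconduser 50002 50G` lists the nine steps in order; once they look right, you can copy them into your terminal one at a time.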

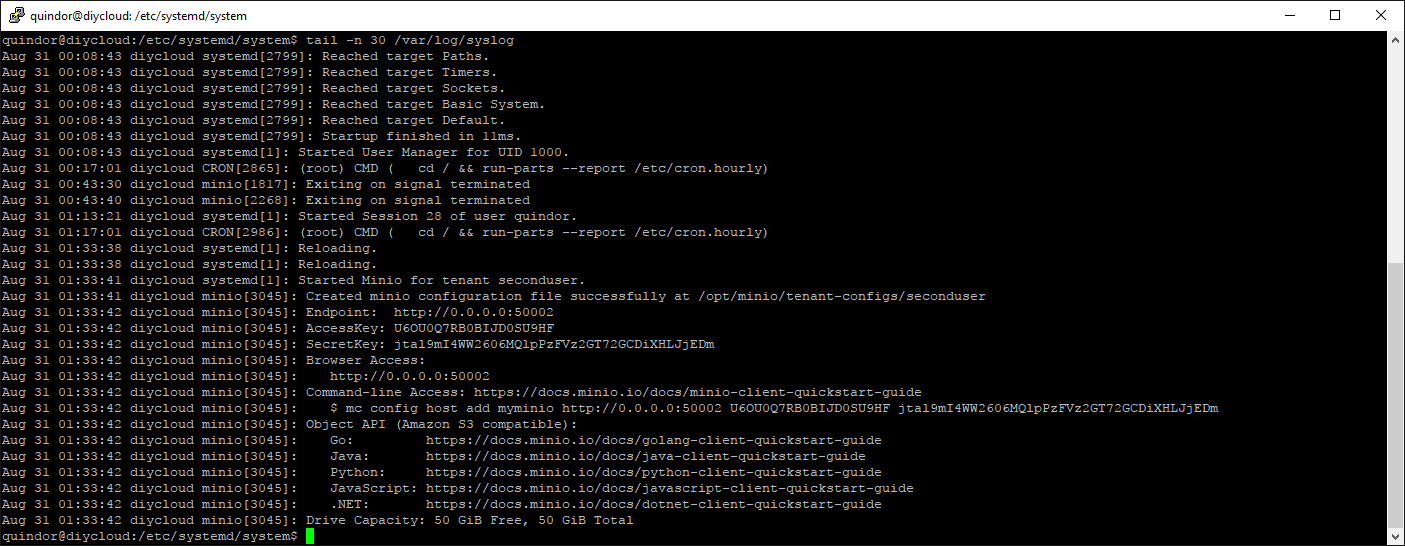

Looking up key for seconduser tenant

Since this time we started the Minio server directly as a service, we didn't see which keys were generated. Use this command to look them up:

tail -n 30 /var/log/syslog

The keys are logged during startup. You need them to give to the new tenant, so make sure to also include the correct port number for that tenant!
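With several tenants starting at boot, the last 30 syslog lines can get crowded. The sketch below filters log text for the key lines and keeps only the most recent pair. It rests on an assumption you should verify against your own log: that the Minio release used here prints `AccessKey: ...` and `SecretKey: ...` lines in its startup banner, which syslog prefixes with a timestamp, hostname and `minio[PID]:`.

```shell
#!/bin/sh
# Sketch: pull the most recent Minio access/secret key lines from log
# text on stdin. Assumes banner lines of the form "AccessKey: XXX" and
# "SecretKey: YYY" (older Minio releases); adjust if yours differ.
extract_minio_keys() {
    sed -n 's/.*AccessKey:[[:space:]]*\([^[:space:]]*\).*/AccessKey \1/p
s/.*SecretKey:[[:space:]]*\([^[:space:]]*\).*/SecretKey \1/p' | tail -n 2
}
```

Usage: `tail -n 30 /var/log/syslog | extract_minio_keys`, or, to look only at one tenant's service, `journalctl -u minio-seconduser.service | extract_minio_keys`.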

Last step, firewall and DNS configuration

Almost done with configuring the server, we just need to make it accessible over the internet.

Firewall port forwarding

Since we'll be accessing the Minio S3 storage servers over the internet, you need to configure your modem/router/firewall to forward ports to the server. Each tenant requires one forwarded TCP port, so if you expect more tenants in the future, forwarding a block such as 50001-50020 is easiest.
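When you forward a block of ports, each new tenant simply gets the next unused port in that block. The hypothetical helper below picks it from a list of ports already assigned. It is a sketch: it only looks at the list you pass in and does not check what is actually listening on the server.

```shell
#!/bin/sh
# Hypothetical helper: print the first port in [first, last] that is not
# in the list of already-assigned ports given as extra arguments.
next_free_port() (
    first=$1; last=$2; shift 2   # remaining arguments: ports in use
    port=$first
    while [ "$port" -le "$last" ]; do
        in_use=no
        for used in "$@"; do
            [ "$used" -eq "$port" ] && in_use=yes
        done
        if [ "$in_use" = no ]; then
            echo "$port"
            exit 0
        fi
        port=$((port + 1))
    done
    echo "no free port left in $first-$last" >&2
    exit 1
)
```

With the example block and two existing tenants, `next_free_port 50001 50020 50001 50002` prints `50003`. The ports already in use could be collected from the unit files, for instance with `grep -ho '0\.0\.0\.0:[0-9]*' /etc/systemd/system/minio-*.service | cut -d: -f2`.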

Static DNS entry

You also need to make sure that your server is reachable through a DNS name on the internet. Although connecting directly to the IP address will work, if that IP ever changes you would need to update all your tenants, and they would have to update all their clients. Setting up a static DNS name (even one pointing to a dynamic IP) is a smart move!

If you don't own a domain you can create a DNS name in, look into one of the dynamic DNS providers. Some have Linux clients you can install on the server to automatically update the DNS record when the server's public IP changes!

All done! Next up: installing and configuring the client.

And that ends this post about DIY cloud backup, the server chapter. Hopefully I’ve explained everything you need to know to get your own server and Minio instances up and running.

Leave a comment below with what you thought of it, and let me know if something needs to be added or changed!

Click here to go to the next post, installing and configuring the client software!

To expand on the great post here, if you are using TLS and/or you want to configure as a Windows service, see https://forum.duplicati.com/t/windows-service-minio-with-ssl-error/527/9 for issue and solution.

Regarding Apollo Lake and Ubuntu 16.04, I have Ubuntu 16.04.3 LTS running on a NUC6CAYH featuring the J3455 CPU.

So I guess the hardware you want to use should cause no problems with Ubuntu 16.04!

Great work! Any chance you could build a downloadable VM (maybe on GitHub)?

Anders: Just run minio in a docker container and use a volume to save it where you want to!

I have my reasons for doing it my way but yeah you can also run it inside of a container.

Great write up, and a good path forward from CrashPlan!

One question – does Minio need to be proxied behind something handling SSL, or is it safe as-is? I know the files going to/from it are encrypted, but I didn’t know about the rest of the traffic.

Thanks!

In theory it’s safe but you can certainly setup minio or a proxy to connect to it using SSL to add an extra secure layer!

This seems wrong to me: the user and password are sent unencrypted over the internet, so someone who captures them can at least delete data. Or am I missing something?

Is data encrypted with SSL if using DDNS and an IP bind for a private IP?

The data is encrypted on the Duplicati side

I’m just starting out learning about MinIO and looking through your DIY tutorial has been great. I’m trying to break down the steps for my own understanding. Thank you for putting this together.

One thing I'm not getting is the location of the file controlling MINIO_ACCESS_KEY and MINIO_SECRET_KEY. Following this tutorial, I'm not getting randomly generated keys; it seems all users get assigned the default "minioadmin" for both keys.

Looking at MINIO docs, I tried looking into the config.json file (/backupstorage/tenants/quindor/.minio.sys/config/config.json ) – However, the KEY is not located there.

Where would I be able to set the “MINIO_ACCESS_KEY” and the “MINIO_SECRET_KEY”

Again, thank you for the great tutorial

N2

I figured it out, I added an Environment variable in the environment file.
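Following up on that approach: one way to give each tenant its own random credentials is a per-tenant environment file that the unit loads with an `EnvironmentFile=` line. The sketch below generates such a file's contents. Assumptions to verify for your Minio version: older releases read `MINIO_ACCESS_KEY` and `MINIO_SECRET_KEY` (newer ones use `MINIO_ROOT_USER` and `MINIO_ROOT_PASSWORD`), and the file path used in the comments is hypothetical.

```shell
#!/bin/sh
# Sketch: generate random Minio credentials for one tenant as
# environment-file lines. Assumes an older Minio that reads
# MINIO_ACCESS_KEY/MINIO_SECRET_KEY, and a unit line such as
#   EnvironmentFile=/opt/minio/tenant-configs/<tenant>/minio.env   (hypothetical path)

random_string() {
    # $1 = length; letters and digits only, read from the kernel RNG
    LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c "$1"
}

render_minio_env() {
    printf 'MINIO_ACCESS_KEY=%s\n' "$(random_string 20)"
    printf 'MINIO_SECRET_KEY=%s\n' "$(random_string 40)"
}
```

For example, `render_minio_env | sudo tee /opt/minio/tenant-configs/seconduser/minio.env` followed by `sudo chmod 600` on that file keeps the secret readable only by its owner; restart the tenant's service afterwards so Minio picks up the new keys.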