I recently needed to build a 2U server for home and LAN party use. Since AMD Ryzen now offers a very interesting 8-core CPU with plenty of PCIe lanes, I decided to use a Ryzen 1700X. The server runs Proxmox and even uses GPU passthrough! This post hosts the first video and some configuration details that are hard to convey in a video. A second video and post with more information about some of the hardware and the GPU passthrough will follow.

Content Index:

This build has spawned several articles:

- This Article: about the build hardware and a ZFS storage how-to

- How to quiet the server down using different fans and PWM control

- A how-to on GPU passthrough within Proxmox for running X-split/OBS with GPU encoding and streaming to YouTube/Twitch

(I did another server build, this time in a Fractal Design Core 2300 with a Ryzen 2700. You can check it out here.)

Updates

2018-05-05 For a stable system, upgrade the Asus X370-Pro to the latest BIOS and set the “Power Supply Option” to “Typical current idle”. This solves the freezes the server sometimes had while idle.

Build video Time-Lapse

For the build I made a time-lapse video with lots of information overlays. Make sure to watch it first!

Second video

In this second video I go a bit deeper into certain topics concerning the server and the GPU hardware passthrough. One of the other subjects is the included fan PCB and getting the server to make a bit less noise!

Used hardware

- Case: Inter-Tech IPC 2U-20255

- Case Power Supply: Inter-Tech FSP500-702UH

- Motherboard: Asus PRIME X370-Pro (Succeeded by the X470-Pro, Amazon)

- CPU: AMD Ryzen 1700X (Succeeded by the 2700(X), Amazon)

- CPU Cooler: Noctua NH-L9a-AM4 (Amazon)

- Memory: Corsair 32GB (2x16GB) 2666 CL16 (Amazon)

- Disks: 4x12TB Seagate IronWolf + 2x 2TB (Amazon)

- SSDs: 2x 1TB Samsung 860 EVO, 1x 512GB Samsung 960 Pro (Amazon: 860 EVO, 960 PRO)

- Network: 1x1Gbit Intel Onboard + 2x1Gbit Intel i350AM + 2x10Gbit SFP+ (Amazon)

- Gigabyte GeForce GTX 1050 OC Low Profile 2G (Amazon)

- ASUS R5230-SL-1GD3-L Radeon R5 230 1GB GDDR3 (Amazon)

The 2x1Gbit Intel i350AM is a special PCIe x1 variant, because all the other slots were full. You can check it out using the link. You can pick up a cheap Intel 10Gbit SFP+ card there too.

You can find the Molex to 5x SATA cable here, or a variant from SATA to 5x SATA here.

If you don’t have enough SATA data cables, pick up some of these nice nylon-sleeved ones.

Used software

The system runs Proxmox VE 5.1. The install you saw in the video was done from a USB stick, and everything worked out of the box. At the time of writing, it was important to enable the testing repository to get a newer kernel for GPU passthrough.

Proxmox is a virtualization platform with a web GUI around KVM and LXC, running on a Debian base. It has ZFS natively integrated and offers advanced cluster functionality for running virtual machines and containers. They have a community edition you can run for free! 🙂

Some more information about configuration within Proxmox and GPU passthrough will be detailed in another post.

ZFS configuration

A lot of people asked me how I configured the ZFS pools so here is a small walkthrough. This is by no means a comprehensive guide to ZFS but mainly what I did to create the storage for this server.

Storage hardware

The board has 8x SATA-600 connectors and 1x M.2 NVMe PCIe 3.0 x4 connector. In this build, I’m using all of them.

- 1x NVMe (PCIe 3.0 x4) Samsung 960 Pro 512GB

- 2x SATA-600 Samsung 860 EVO 1TB

- 4x SATA-600 Seagate Ironwolf 12TB

- 2x SATA-600 Seagate 2TB

The only expansion option that is left is replacing the 2x2TB drives with another pair of 12TB drives if needed in the future.

ZFS pool layout

The ZFS configuration consists only of mirrors.

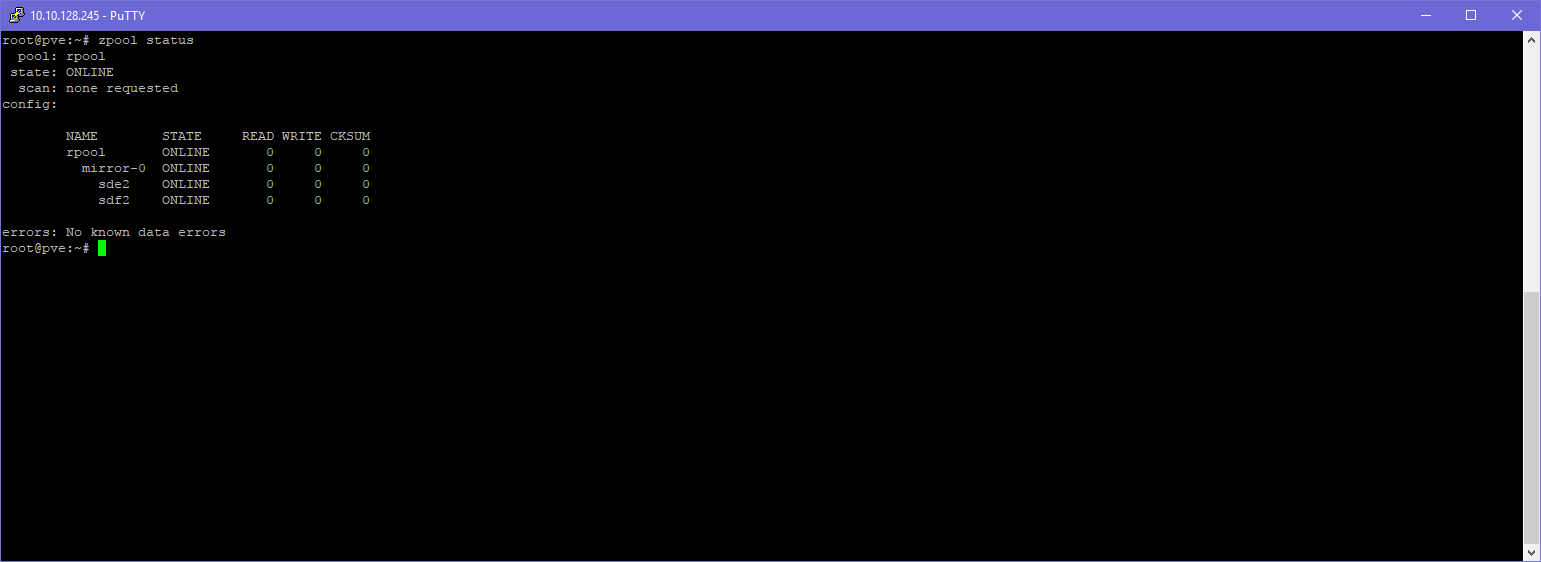

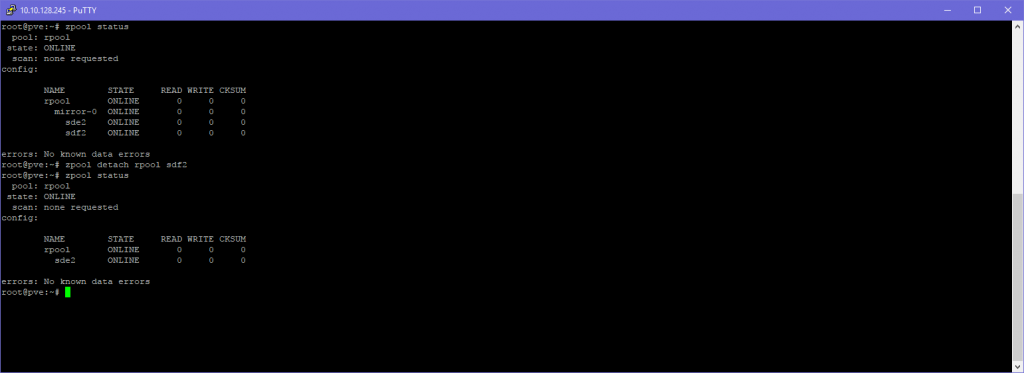

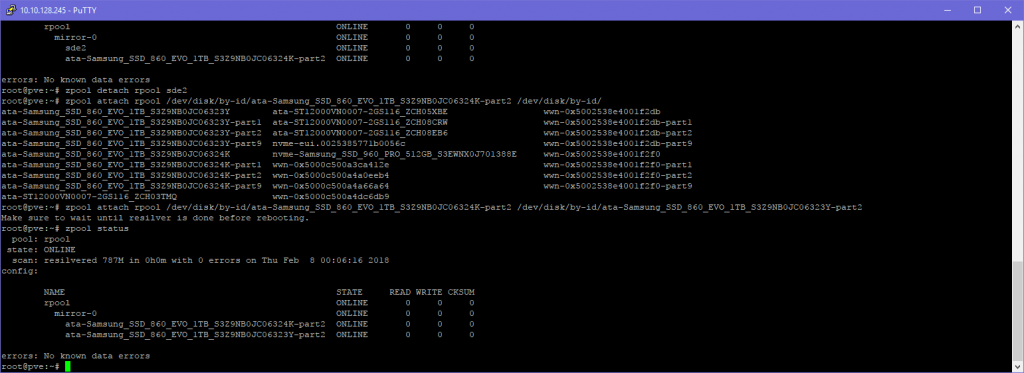

Fixing the Proxmox Boot/VM/Container mirror

During installation I opted to put the 2x 1TB SSDs in a mirror. This should provide plenty of performance, plus redundancy in case of a hardware failure.

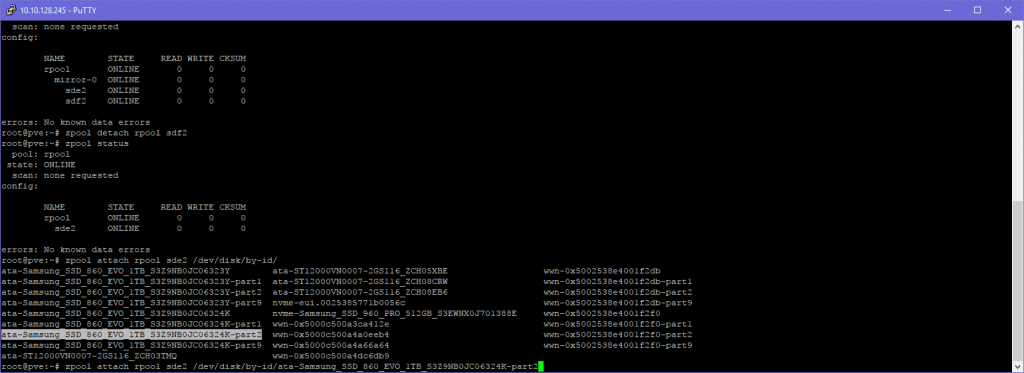

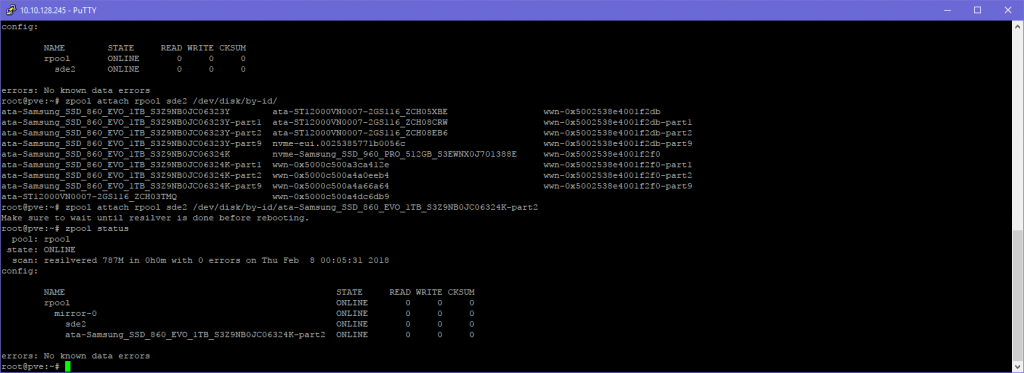

Out of the box, the Proxmox installer creates the pool using device names such as /dev/sdb, /dev/sdc, etc. I don’t really like that approach. I would much rather use /dev/disk/by-id links, because those names stay the same even when the controller, the drive order or even the host changes.

So the first thing to do is fix this.

I did that using the following commands, illustrated with screenshots:

And with that you are using (in my opinion) the correct way to connect your disks in ZFS! Now, even when you change drive order, controller or even hosts, it should be able to find all the disks.

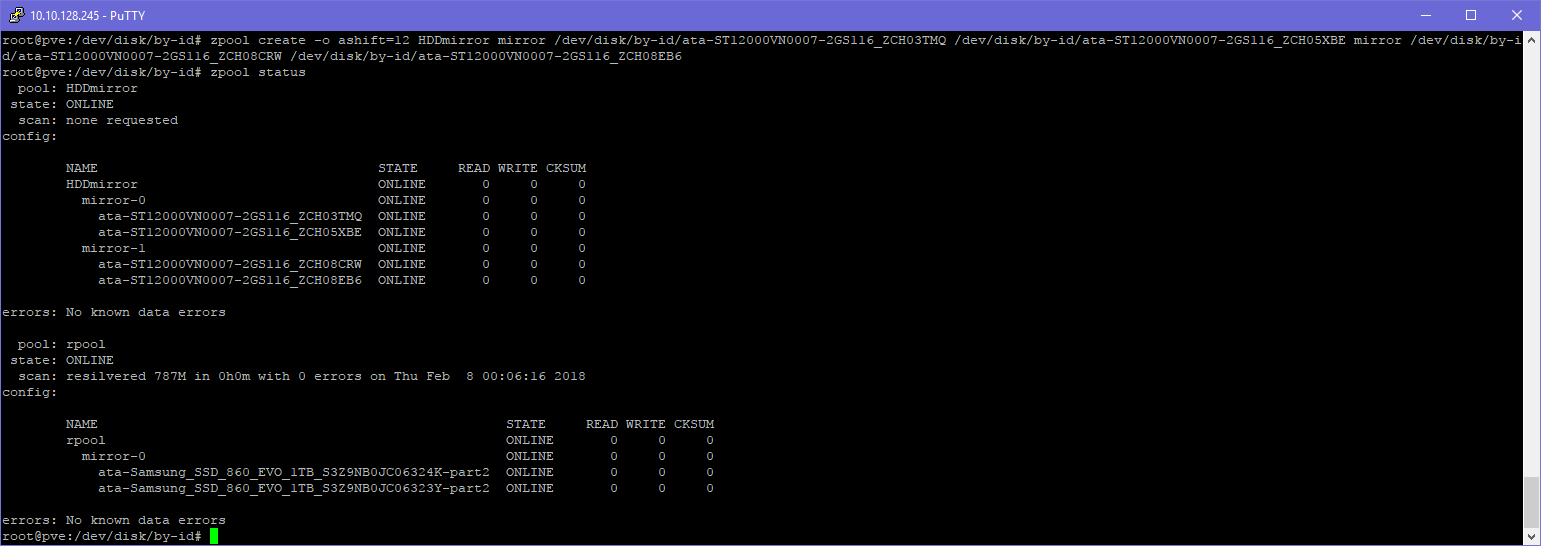
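The exact commands were only shown in the screenshots, so they are not reproduced here. The helper below assumes a Linux host with GNU `readlink -f`. It lists each `/dev/disk/by-id` link next to the kernel device it currently resolves to. That tells you which by-id name belongs to each `/dev/sdX` device the installer used. `ls -l /dev/disk/by-id` shows the same information in raw form. The function name `map_by_id` is made up for this example.

```shell
#!/bin/sh
# Print each /dev/disk/by-id link next to the kernel device it currently points at,
# e.g. "ata-EXAMPLE_SERIAL-part2 -> sdb2" (hypothetical name).
map_by_id() {
  dir="${1:-/dev/disk/by-id}"
  for link in "$dir"/*; do
    [ -L "$link" ] || continue          # only symlinks are by-id entries
    target="$(readlink -f "$link")"     # resolve e.g. ../../sdb2 to /dev/sdb2
    printf '%s -> %s\n' "${link##*/}" "${target##*/}"
  done
}

map_by_id "$@"
```

A common way to switch a data pool that is not in use to these names is `zpool export <pool>` followed by `zpool import -d /dev/disk/by-id <pool>`. The running boot pool cannot be exported that way. Check the Proxmox and OpenZFS documentation before changing it.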

Creating the 4x12TB Pool

For mass data storage I decided to put the 4x12TB disks in one pool with two mirrors. That makes the most sense with this disk configuration. Why?

- RAIDz1 would give me more space, but only the random I/O performance of one disk. Rebuilding (resilvering) this amount of data with only single parity is sketchy at best, so avoid it

- RAIDz2 would give me the same amount of space as mirrors, but again only the random I/O performance of one disk. Sequential performance and redundancy should be fine

- Two mirrors in one pool give me the random I/O of two disks, and still great sequential performance and redundancy. Mirrors also don’t need any parity calculations, which saves CPU
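The sketch below puts numbers on the three options by computing the nominal usable capacity of 4x 12TB. It ignores ZFS metadata, reserved (slop) space and the TB vs TiB difference, so real-world figures will be lower. `usable_tb` is just an illustrative helper.

```shell
#!/bin/sh
# Nominal usable capacity of 4x 12TB disks per layout (no ZFS overhead, decimal TB).
DISKS=4
SIZE_TB=12

usable_tb() {
  case "$1" in
    raidz1)  echo $(( (DISKS - 1) * SIZE_TB )) ;;  # one disk's worth of parity
    raidz2)  echo $(( (DISKS - 2) * SIZE_TB )) ;;  # two disks' worth of parity
    mirrors) echo $(( DISKS / 2 * SIZE_TB )) ;;    # 2-way mirrors keep half the disks
  esac
}

for layout in raidz1 raidz2 mirrors; do
  echo "$layout: $(usable_tb "$layout") TB"
done
```

Mirrors and RAIDz2 end up with the same space. They differ in redundancy: RAIDz2 survives any two failed disks, while two mirrors survive two failures only if the failed disks are in different mirrors.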

The way to configure this is the following:

zpool create -o ashift=12 hddmirror mirror /dev/disk/by-id/DISK1 /dev/disk/by-id/DISK2 mirror /dev/disk/by-id/DISK3 /dev/disk/by-id/DISK4
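Typing four long by-id paths by hand is error-prone. This small dry-run sketch only prints the `zpool create` command, pairing the disks into mirrors in the order given. The helper name `mirror_pool_cmd` is hypothetical. The disk IDs are the ones from the `zpool status` output later in this article. `ashift=12` tells ZFS to use 4096-byte (2^12) sectors, which suits 4K drives like these.

```shell
#!/bin/sh
# Dry run: print (do not run) a "zpool create" command that pairs by-id disks into mirrors.
mirror_pool_cmd() {
  # Need a pool name plus a non-zero, even number of disks.
  if [ $# -lt 3 ] || [ $(( ($# - 1) % 2 )) -ne 0 ]; then
    echo "usage: mirror_pool_cmd POOL DISK DISK [DISK DISK ...]" >&2
    return 1
  fi
  pool="$1"
  shift
  cmd="zpool create -o ashift=12 $pool"
  while [ $# -gt 0 ]; do
    # Each consecutive pair of arguments becomes one 2-way mirror vdev.
    cmd="$cmd mirror /dev/disk/by-id/$1 /dev/disk/by-id/$2"
    shift 2
  done
  echo "$cmd"
}

mirror_pool_cmd hddmirror \
  ata-ST12000VN0007-2GS116_ZCH03TMQ ata-ST12000VN0007-2GS116_ZCH05XBE \
  ata-ST12000VN0007-2GS116_ZCH08CRW ata-ST12000VN0007-2GS116_ZCH08EB6
```

Review the printed line, then paste it to actually create the pool.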

Setting compression

After creating the pool I advise enabling LZ4 compression. It works wonders on modern hardware, hardly costing any CPU and saving precious space where possible! Even when incompressible files are written, it has a mechanism that quickly gives up on compressing them. It also means ZFS reads and writes less data on the disks for compressible files, which makes things a bit more efficient in most cases.

zfs set compression=lz4 hddmirror

And that’s the pool done!

Creating the 2x2TB Pool

The 2x2TB disks were added the same way, but to a different pool. ZFS can handle mirrors of different disk sizes within the same pool. However, I am going to use these disks for different purposes and wanted to separate the I/O.

Add Cache (L2ARC) and/or write “cache” (ZIL/SLOG)

Why the need for cache?

I added the Samsung 960 PRO to use as an L2ARC for the server. During LAN parties a video team will be dumping footage and then using those files directly from several PCs to edit videos. This requires quite a bit of speed to work smoothly for everyone at the same time.

In part, this is why I added the 10 Gigabit network cards. But with “only” 4x12TB disks, which each do about 200MB/sec on average, that gives me at most 400MB/sec on writes and 400 to 600MB/sec on reads. Those numbers aren’t bad, but they are for purely sequential transfers. If multiple people hit the pool at the same time, you won’t reach them.
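The arithmetic in this paragraph can be written out as a quick shell sketch, in decimal MB/s and without protocol overhead. The 200MB/sec per disk is the average from above. The read figure is a theoretical ceiling where every disk in both mirrors serves reads, which is why real mixed workloads land lower, as described above.

```shell
#!/bin/sh
# Back-of-the-envelope throughput, decimal MB/s, no protocol overhead.
DISK_MBS=200   # average sequential speed of one 12TB disk
MIRRORS=2      # two 2-way mirror vdevs in the pool
NIC_GBIT=10

# Writes land on both disks of a mirror, so each mirror writes at about one disk's speed.
pool_write=$(( MIRRORS * DISK_MBS ))
# Either side of a mirror can serve reads, so the ceiling is all four disks.
pool_read_max=$(( MIRRORS * 2 * DISK_MBS ))
# 10 Gbit/s divided by 8 bits per byte.
nic=$(( NIC_GBIT * 1000 / 8 ))

echo "pool write ~${pool_write} MB/s, pool read <= ${pool_read_max} MB/s, 10GbE ~${nic} MB/s"
```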

Adding L2ARC can take some of the load off the disks, because it provides another source for the blocks being requested.

Cons: Yes, adding L2ARC will cost you some memory from the ARC (the in-memory cache). In my case, I believe that trade-off is the correct one.

Why the Samsung 960 PRO and using it for multiple pools

I chose the Samsung 960 PRO because of its performance at a queue depth of 1. It also uses MLC flash instead of TLC, so it handles writes and endurance a bit better.

Because this SSD is connected via PCIe 3.0 x4, it has about 4000MB/sec of bandwidth, and its specs say it can deliver about 3500MB/sec read and 2100MB/sec write. It won’t reach that during L2ARC/SLOG duty. Still, I’m hoping it will come closer to saturating the 10Gbit link, or at least that the disks plus the SSD will provide more I/O than the disks alone.
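The "about 4000MB/sec" follows from the PCIe 3.0 signalling rate: 8 GT/s per lane with 128b/130b encoding. The quick integer sketch below gives the per-direction link bandwidth before packet overhead. For comparison, a 10Gbit link carries roughly 1250MB/sec.

```shell
#!/bin/sh
# PCIe 3.0: 8 GT/s per lane = 8000 MT/s, one bit per transfer per lane, 128b/130b encoding.
MT_PER_LANE=8000
LANES=4

# Usable Mbit/s per lane after encoding overhead (integer math rounds down).
lane_mbit=$(( MT_PER_LANE * 128 / 130 ))
# Whole link in MB/s: lanes times per-lane bits, divided by 8 bits per byte.
link_mbs=$(( lane_mbit * LANES / 8 ))

echo "PCIe 3.0 x${LANES}: ~${link_mbs} MB/s per direction"
```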

Cons:

- It’s not an enterprise SSD, so it doesn’t have huge endurance, but it’s still a lot better than any generic SSD.

- It lacks any form of power-loss protection, so using it as a ZIL/SLOG device is debatable.

If you are a ZFS purist, this device cannot be used as a ZIL/SLOG device. In my opinion, the missing power-loss protection will be the least of your problems unless you go the whole route: ECC memory, a UPS, disabled disk write caches, etc. But decide for yourself how important the last few seconds of data are to you!

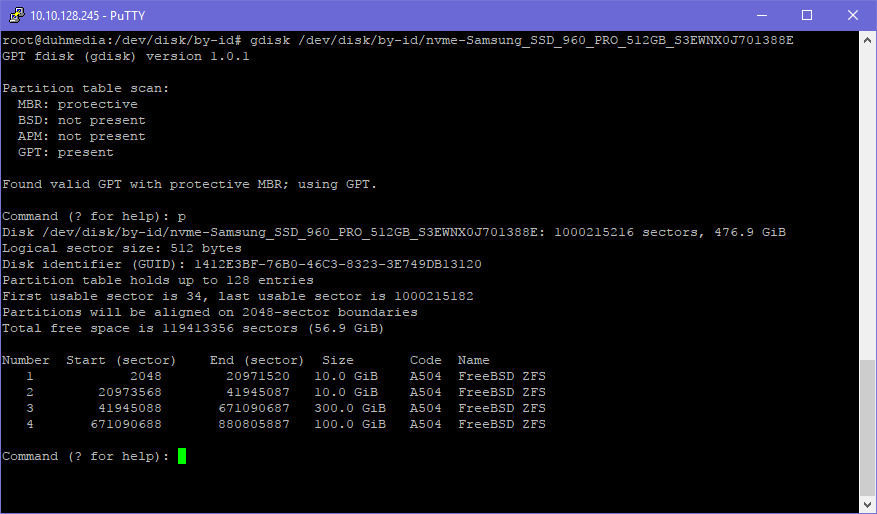

Nevertheless, I have set aside some space on the SSD so I can experiment with a ZIL/SLOG. I’m therefore using the following partitions on it:

Total Size: 512GB

- 10GB Potential ZIL for 12TB HDDs

- 10GB Potential ZIL for 2TB HDDs

- 300GB L2ARC for 12TB HDDs

- 100GB L2ARC for 2TB HDDs

- 50GB overprovisioning
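The list adds up to 470GB, which looks short of 512GB until you account for units. The drive's 512GB is decimal, while gdisk's `+10G` means GiB. The sketch below prints non-interactive `sgdisk` equivalents of the gdisk steps that follow, as a dry run that executes nothing, and checks the unit arithmetic. It assumes the partitions are created in the order listed (part1/part2 SLOG, part3/part4 L2ARC). That order matches the `-part1` log and `-part3` cache devices used later. `print_layout` is a hypothetical name.

```shell
#!/bin/sh
# Dry run: print sgdisk commands for the layout above instead of running them.
# Override DISK for your own drive; the printed commands are destructive if executed.
DISK="${DISK:-/dev/disk/by-id/nvme-Samsung_SSD_960_PRO_512GB_S3EWNX0J701388E}"
SIZES_GIB="10 10 300 100"   # part1/2: SLOG for 12TB/2TB pool, part3/4: L2ARC for 12TB/2TB pool

print_layout() {
  n=1
  for size in $SIZES_GIB; do
    # -n N:0:+SIZE -> partition N at the default start, SIZE long
    # -t N:A504    -> the same A504 type code used in the interactive gdisk steps
    echo "sgdisk -n $n:0:+${size}G -t $n:A504 $DISK"
    n=$((n + 1))
  done
}

# A nominal "512GB" SSD is 512 * 10^9 bytes, while sgdisk/gdisk "G" means GiB (2^30 bytes).
total_gib=$(( 512000000000 / 1073741824 ))
used_gib=0
for size in $SIZES_GIB; do used_gib=$(( used_gib + size )); done

print_layout
echo "# partitioned: ${used_gib} GiB of ~${total_gib} GiB, unpartitioned: $(( total_gib - used_gib )) GiB"
```

About 56GiB stays unpartitioned, which lines up with the roughly 50GB reserved for overprovisioning.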

Creating the partitions for L2ARC/ZIL

To create the partitions I use a tool called “gdisk”, using the following line:

gdisk /dev/disk/by-id/nvme-Samsung_SSD_960_PRO_512GB_S3EWNX0J701388E

To create the first partition, enter:

n, 1, +10G, A504

Repeat those steps for the remaining partitions, then write the partition table with w. After that you should see something like the following:

Adding a Cache to a pool

To add a cache to a pool you run the following command:

zpool add hddmirror cache /dev/disk/by-id/nvme-Samsung_SSD_960_PRO_512GB_S3EWNX0J701388E-part3

    root@duhmedia:/etc/modprobe.d# zpool status
      pool: hddmirror
     state: ONLINE
      scan: scrub repaired 0B in 0h0m with 0 errors on Sun Feb 11 00:24:43 2018
    config:

            NAME
            hddmirror
              mirror-0
                ata-ST12000VN0007-2GS116_ZCH03TMQ
                ata-ST12000VN0007-2GS116_ZCH05XBE
              mirror-1
                ata-ST12000VN0007-2GS116_ZCH08CRW
                ata-ST12000VN0007-2GS116_ZCH08EB6
            cache
              nvme-Samsung_SSD_960_PRO_512GB_S3EWNX0J701388E-part3

Adding a ZIL/SLOG to a pool

To add a ZIL/SLOG (see warnings above!) to a pool, run the following command:

zpool add hddmirror log /dev/disk/by-id/nvme-Samsung_SSD_960_PRO_512GB_S3EWNX0J701388E-part1

    root@duhmedia:/etc/modprobe.d# zpool status
      pool: hddmirror
     state: ONLINE
      scan: scrub repaired 0B in 0h0m with 0 errors on Sun Feb 11 00:24:43 2018
    config:

            NAME
            hddmirror
              mirror-0
                ata-ST12000VN0007-2GS116_ZCH03TMQ
                ata-ST12000VN0007-2GS116_ZCH05XBE
              mirror-1
                ata-ST12000VN0007-2GS116_ZCH08CRW
                ata-ST12000VN0007-2GS116_ZCH08EB6
            logs
              nvme-Samsung_SSD_960_PRO_512GB_S3EWNX0J701388E-part1
            cache
              nvme-Samsung_SSD_960_PRO_512GB_S3EWNX0J701388E-part3

Raising L2ARC fill rate

Because I want the cache to hold parts of most of the current media files, I raised the L2ARC fill rate. By default this setting is conservative: it caches only frequently used blocks over a long period of time, not sequential files, so the SSD isn’t worn out by writes.

Whether you should change this setting, and whether it will benefit you, really depends on your usage, so use it with caution! It will cause a lot more writes on your SSD and might not give you better performance!

To change these values you need to create a new file in /etc/modprobe.d. You can use the following commands:

echo options zfs l2arc_noprefetch=0 > /etc/modprobe.d/zfs.conf

echo options zfs l2arc_write_max=524288000 >> /etc/modprobe.d/zfs.conf

echo options zfs l2arc_write_boost=524288000 >> /etc/modprobe.d/zfs.conf

After this, run:

update-initramfs -u

to make sure the values are loaded at boot. When that’s done, reboot the system. You can check the new values in the files located in /sys/module/zfs/parameters.

This sets the L2ARC to a max write speed of 500MB/sec and to cache everything from single blocks to sequential reads.
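The byte values are easier to sanity-check when written out: 524288000 is exactly 500 MiB. The OpenZFS module parameter documentation describes the two tunables as follows:

- `l2arc_write_max`: the bytes written per L2ARC feed interval (`l2arc_feed_secs`, 1 second by default).
- `l2arc_write_boost`: extra headroom added while the L2ARC device is still cold.

The sketch below does the arithmetic and prints the live values. `show_l2arc_params` is a hypothetical helper that accepts another directory for testing.

```shell
#!/bin/sh
# 524288000 bytes = 500 * 1024 * 1024 = 500 MiB.
WRITE_MAX=$(( 500 * 1024 * 1024 ))
echo "l2arc_write_max / l2arc_write_boost value: $WRITE_MAX"

# Print the live value of each tunable set in /etc/modprobe.d/zfs.conf.
show_l2arc_params() {
  dir="${1:-/sys/module/zfs/parameters}"
  for p in l2arc_noprefetch l2arc_write_max l2arc_write_boost; do
    if [ -r "$dir/$p" ]; then
      printf '%s=%s\n' "$p" "$(cat "$dir/$p")"
    else
      printf '%s=<not found>\n' "$p"   # ZFS module not loaded, or a different path
    fi
  done
}

show_l2arc_params "$@"
```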

Again, I can’t stress this enough: these settings might be completely wrong for your installation and use case. They might actually make performance worse and kill your SSD sooner. Use them with caution and do your own research!
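One way to do that research is to check how often the L2ARC actually serves reads. On ZFS on Linux, the `l2_hits` and `l2_misses` counters live in /proc/spl/kstat/zfs/arcstats, in lines of the form `name type value`. The sketch below computes a hit ratio from them; the helper name is hypothetical. A low ratio after a busy event hints that the tuning above is not paying off. `zpool iostat -v hddmirror` also shows the I/O on the cache device.

```shell
#!/bin/sh
# Compute the L2ARC hit ratio from a ZFS on Linux arcstats kstat file.
# Data lines look like "<name> <type> <value>", e.g. "l2_hits 4 12345".
l2arc_hit_ratio() {
  file="${1:-/proc/spl/kstat/zfs/arcstats}"
  awk '
    $1 == "l2_hits"   { hits = $3 }
    $1 == "l2_misses" { misses = $3 }
    END {
      total = hits + misses
      if (total == 0) { print "no L2ARC reads recorded yet"; exit }
      printf "L2ARC hits: %.0f, misses: %.0f, hit ratio: %.1f%%\n", hits, misses, 100 * hits / total
    }' "$file"
}

# Only run automatically where the kstat file exists (ZFS module loaded).
if [ -r /proc/spl/kstat/zfs/arcstats ]; then
  l2arc_hit_ratio
fi
```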

End of this part

As mentioned above, I’m going to write a second blog post about Proxmox and GPU passthrough. It will come with a video showing some hardware features and how to make this server quieter than it is out of the box.

If you have any questions or comments, let me know down below!

Hi Quindor,

I’d like to ask: why do you need this many ports?

Network: 1x1Gbit Intel Onboard + 2x1Gbit Intel i350AM + 2x10Gbit SFP+

Can’t you use the port on the motherboard for all the VMs, or use a software router, e.g. pfSense or OPNsense?

Also, I forgot to add: are you going to run this 24/7? And why did you choose consumer parts instead of server parts?

Price and noise, but mostly price. A pre-built server-grade machine often costs 1.5x to 2x as much as building it yourself with desktop components like I did. There is no denying that server hardware exists for good reasons. But in the environments I run these machines in, it’s no problem if I need to replace a component after a while, even if that causes some downtime.

Well, for me, they all have a different purpose:

Onboard 1Gbit: Static IP in my home and direct management on a LANparty

2x1Gbit-1: Static IP in our Camera VLAN

2x1Gbit-2: Static IP in our Management VLAN/MISC if needed

2x10GbitSFP+-1: LANparty IP for main users (File sharing for Mediateam)

2x10GbitSFP+-2: LACP with port 1 for redundancy (linked to secondary network core)

So they all have a purpose. Sometimes it’s just convenience, though. You could of course run all VLANs over the same port, but that would take more configuration and cost extra time every time we need to change it for a LAN party.

Thank you for sharing your thoughts. I think I’m going to build using desktop components like you; I think it’s more practical too.

Are you going to bridge the ports to the virtualized systems in Proxmox?

Can you add guides on your virtualized systems, for example whether you add a firewall in Proxmox?

And how do you access Windows for gaming? Do you use RDP?

It would be nice to know which VMs you installed and what they are used for at the LAN party. Did you run a Steam cache, etc.?

This server is specifically for media-related tasks, such as some file storage containers (hosting SMB/CIFS, NFS, FTP, etc.) that use the host ZFS storage through a passthrough. The shared folders are mostly for our media team, which has several members live editing and dumping footage to it. It works surprisingly well. I have several TurnKey containers that have no local storage other than the boot drive and use passthrough ZFS mounts, and I was able to get speeds of 500MB/sec+ without a problem.

Besides that, it also runs a Linux VM for our camera system NVR (again using the ZFS storage) with about 16 to 20 cameras. It also runs a Windows VM with OBS, which live streams to the internet (encoding using GPU passthrough).

It might run some more services in the future if needed, but this is basically our media machine.

We do run things like Infoblox DHCP/DNS and other services, but those are currently still on other virtualization servers. We also have a giant Steam cache (~50TB). Because of the performance needed with 1200+ simultaneous attendees, it’s hosted on another physical machine with a truckload of disks for the IOPS and multi-10Gbit access.

Can I ask what software you are using for surveillance?

Also, installing on an SSD (860 PRO) caused massive writes and wear for me: about 1% every week just running Proxmox. Looking around, I solved it by disabling 2 services used by the cluster, though obviously I cannot use clustering this way. How did you manage this?

Why didn’t you choose Optane instead of the 3x SSDs?

Isn’t it faster, with a longer lifetime?

Although I would have loved to use Optane in this build, sadly it is much more expensive.

I wanted a mirror for the boot and VM drive, so that’s what I use the 2x1TB for. For the cache drive an Optane module would have been a perfect fit. However, the nice versions are quite expensive and the cheaper versions are too slow for 10Gbit. So the 960 PRO was a bit of a compromise, but it should still last a while even with a lot of writes (that’s why it’s the PRO, not the EVO). Also, because this server is built for use at events, the disks won’t be writing much between events, which saves the NAND flash a bit.

I was wondering what better options I would have with the ASUS Z-370 PRIME PRO board. Can you elaborate, please?

One of the best guides around on configuring things before a Proxmox installation.

My purpose for using Proxmox is quite different from yours. I want to emulate the environment at work, which consists of a Server 2012 R2 machine, Windows 7/10 clients and 2 Mac OS X machines. Even so, I’d like to know if you can help me with the initial installation.

First of all, my setup is a Dell Precision based on the X79 platform with the Intel C600 chipset, with 64GB ECC memory and 2 Xeon E2667 (if I recall correctly). It also had a PERC H310 controller, which I unplugged right away because I’ve read it can’t pass TRIM commands. That leaves the onboard Intel SAS controller (PCI ID 8085:1d6b), and I’m not sure it can pass the disks through to ZFS as a simple HBA. The OS is going to live on a 128GB SSD, and I have 3 more 480GB SSDs for data, meaning ISOs, VMs, containers and such.

Given all of the above, what do you think is the best way to set up the drives? I initially installed the OS on ext4, which lost 10-15% of the space, and I couldn’t work out whether it had gone to partitioned or unpartitioned space. It also created a swap file of only 1.75GB, which is the opposite of what I’ve read it should be (I mean, too small). Is it true that using ZFS for the OS doesn’t create swap space at all? Would I have any benefits from using ZFS instead of ext4 for the OS installation?

I’m not even at step 2 yet, which is setting up the 3 SSDs as a kind of software RAID. With 3 identical SSDs, what do you think would fit best? I’ve already read many docs and forum guides and watched videos, but instead of the picture clearing up, it becomes more and more blurry…

Thank you in advance

I’m not sure I’m completely following, but I would use the 128GB SSD as a single ZFS disk (mainly because it’s easy to use). I would then configure the 480GB SSDs in something like a RAIDz1 if you value the data, or otherwise just a normal span/stripe.

More advanced is that you can leave the 128GB SSD out and work with partitions so you can run a boot mirror and again use the RAIDz1 (with a bit less space) if you want redundancy for your boot partition also.

That’s correct: by default Proxmox does not seem to create a swap file when installing on ZFS. Because of that, you should not overcommit memory!

I also don’t follow your USB question. If you want to install Proxmox onto it, don’t; it will die. If you want to use it as extra storage, you can define that in the GUI.

I forgot to ask in my previous post: say I install the OS on a USB flash drive (16GB with ext4 or ZFS, whichever you think is best). By default the installer automatically creates the lvm and lvm-thin storage on that USB drive. How would I then move or remap (or whatever it’s called) that storage to the disks I’ve set aside for VMs, ISOs, containers, etc.?

Can it be done via the web UI or the CLI somehow?

Thank you
